Artificial Intelligence

Pattern Recognition

Neural Networks are used in applications like Facial Recognition.

These applications use Pattern Recognition.

This type of Pattern Classification can be done with a perceptron.

Put simply, neural networks are multi-layer perceptrons.

Pattern Classification

The basis of pattern classification can be demonstrated with a simple perceptron.

Imagine a straight line (a linear graph) in a space with scattered x,y points.

How can you classify the points above and below the line?

A perceptron can be trained to recognize the points on each side of the line.

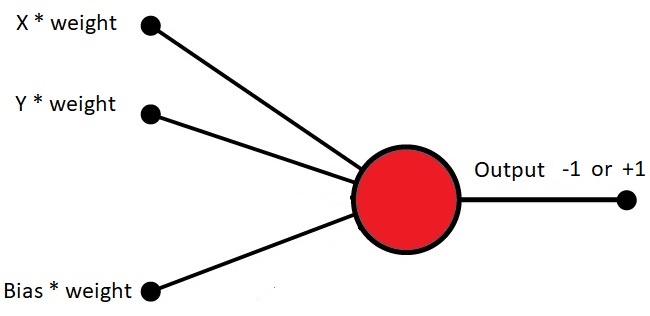
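Training needs points whose correct side is already known. The sketch below shows one way to label such points. It assumes a hypothetical line f(x) = 1.2 * x + 50, a 400 x 400 space, and a hypothetical helper `labelPoint`. Under those assumptions, a point's desired answer is +1 if it lies above the line and -1 otherwise.

```javascript
// Hypothetical line used only for this illustration: f(x) = 1.2 * x + 50
function f(x) {
  return 1.2 * x + 50;
}

// Desired answer for a point: +1 above the line, -1 on or below it
function labelPoint(x, y) {
  return y > f(x) ? 1 : -1;
}

// Scatter a few random points in an assumed 400 x 400 space and label them
const points = [];
for (let i = 0; i < 5; i++) {
  const x = Math.random() * 400;
  const y = Math.random() * 400;
  points.push({ x, y, desired: labelPoint(x, y) });
}

console.log(points);
```

These labels are the "correct answers" a perceptron's guesses can later be compared against.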

The perceptron will need two inputs: the x and y coordinates of a point.

Each input should have a weight between -1 and +1.

The neuron (shown in red) should compute x * xWeight + y * yWeight.

With correct weights, the sign of that sum tells which side of the line a point is on, so the neuron can return +1 or -1 (over the line or under the line).
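Here is a minimal sketch of that computation. The weights are hand-picked for illustration and were not produced by training: -1 for x and +1 for y. With these weights the sum becomes y - x, so its sign shows whether a point lies over or under the line y = x. The function name `neuron` is hypothetical.

```javascript
// Hand-picked illustrative weights (within -1..+1), not trained ones
const xWeight = -1;
const yWeight = 1;

// Weighted sum of the two inputs, turned into +1 or -1 by its sign
function neuron(x, y) {
  const total = x * xWeight + y * yWeight; // equals y - x here
  return total > 0 ? 1 : -1; // +1 = over the line y = x, -1 = under it
}

console.log(neuron(10, 50)); // 1  (50 is above 10)
console.log(neuron(50, 10)); // -1 (10 is below 50)
```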

The Bias

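If x = 0 and y = 0, the weighted sum is 0 no matter what the weights are, so the neuron cannot tell which side of the line the point is on.

To avoid this problem, it needs a third input with the constant value 1 (this is called a Bias). The weight of this input is adjusted like the other weights.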
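The short sketch below shows why the constant input helps. The weight values are illustrative assumptions, and `rawOutput` is a hypothetical helper. Without the bias, the origin always produces a sum of 0. With a third input fixed at 1, the bias weight is added to the sum.

```javascript
// Illustrative weights for x, y and the bias input (assumed, not trained)
const weights = [0.74, -0.53, 0.3];

// Weighted sum for a point, with or without the constant bias input of 1
function rawOutput(x, y, useBias) {
  const total = x * weights[0] + y * weights[1];
  return useBias ? total + 1 * weights[2] : total;
}

console.log(rawOutput(0, 0, false)); // 0: any x/y weights give 0 at the origin
console.log(rawOutput(0, 0, true));  // 0.3: the bias weight moves the sum off 0
```

Because the bias weight is trainable, the decision line no longer has to pass through the origin.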

The Perceptron Algorithm

- Multiply all inputs with their Weights

- Sum all weighted inputs

- Pass the sum to an Activation function

Assume you have two Inputs:

const inputs = [100,300]; // x,y values

const weights = [0.74,-0.53]; // x,y weights

Add a Bias:

inputs[2] = 1; // the bias input is always 1

weights[2] = Math.random() * 2 - 1; // random bias weight between -1 and +1

Pass the inputs and the weights to a Sum Function:

function sum(inputs, weights) {
  // Multiply each input by its weight and add up the results
  let total = 0;
  for (let i = 0; i < inputs.length; i++) {
    total += inputs[i] * weights[i];
  }
  return total;
}

Pass the sum to an Activation Function, which turns it into the final answer: +1 (over the line) or -1 (under the line).
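The following is a minimal activation sketch. It assumes a step function that returns +1 for a positive sum and -1 otherwise, which matches the +1/-1 outputs described earlier. The name `activate` is hypothetical. The example reuses the inputs and weights from above. To make the result repeatable, it fixes the bias weight at an illustrative 0.2 instead of drawing a random value.

```javascript
// Weighted sum of inputs (same logic as the sum function above)
function sum(inputs, weights) {
  let total = 0;
  for (let i = 0; i < inputs.length; i++) {
    total += inputs[i] * weights[i];
  }
  return total;
}

// Step activation: +1 (over the line) for a positive sum, otherwise -1
function activate(total) {
  return total > 0 ? 1 : -1;
}

const inputs = [100, 300, 1];       // x, y and the bias input
const weights = [0.74, -0.53, 0.2]; // 0.2 is an illustrative bias weight

console.log(activate(sum(inputs, weights))); // -1 (the sum is about -84.8)
```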

The Neuron is Guessing

In the previous example, the output from the neuron is not reliable. It is only correct by chance.

The neuron is using random weights, so it is just "guessing".

It needs adjustments: it needs to be trained.

Backpropagation

After each guess, the perceptron calculates how wrong the guess was.

If the guess is wrong, the perceptron adjusts the bias and the weights so that the guess will be a little bit more correct the next time.
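This adjustment can be sketched with the classic perceptron learning rule. The error is the desired answer minus the guess. Each weight then moves by learningRate * error * input, and the bias weight moves by learningRate * error because its input is 1. The learning rate of 0.00001 and the names `predict` and `train` are assumptions for illustration.

```javascript
// Weighted sum followed by a +1/-1 step activation
function predict(inputs, weights) {
  let total = 0;
  for (let i = 0; i < inputs.length; i++) total += inputs[i] * weights[i];
  return total > 0 ? 1 : -1;
}

// One training step of the perceptron learning rule (assumed learning rate)
function train(inputs, weights, desired, learningRate = 0.00001) {
  const error = desired - predict(inputs, weights); // 0, +2 or -2
  if (error === 0) return; // correct guess: nothing to adjust
  for (let i = 0; i < inputs.length; i++) {
    weights[i] += learningRate * error * inputs[i]; // bias input is 1
  }
}

const weights = [0.74, -0.53, 0.2];
train([100, 300, 1], weights, 1); // this point should be "over the line"
console.log(weights); // each weight nudged toward a +1 answer
```

Small steps keep any single wrong guess from overturning what earlier examples taught the weights.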

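This type of learning is often called backpropagation. Strictly speaking, the single-neuron update is the perceptron learning rule. Backpropagation is the name used when the same error-driven correction is carried back through the layers of a multi-layer network.

After a few thousand training rounds, the perceptron becomes quite good at classifying the points.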
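The sketch below puts these pieces together. It trains on 5000 random points and measures accuracy on fresh points before and after training. Several parts are assumptions of this sketch and do not come from the text above: the hypothetical line f(x) = 1.2 * x + 50, a 400 x 400 space, and a learning rate of 0.1. Dividing x and y by 400 is also an added design choice. It keeps the coordinates on the same scale as the bias input of 1, so the bias weight can learn at a similar speed. The exact accuracy varies from run to run because the starting weights and points are random.

```javascript
// Assumed setup: a 400 x 400 space and the hypothetical line f(x) = 1.2x + 50
const SIZE = 400;
const f = (x) => 1.2 * x + 50;
const desired = (x, y) => (y > f(x) ? 1 : -1);

// Random starting weights between -1 and +1 for x, y and the bias
const weights = [0, 1, 2].map(() => Math.random() * 2 - 1);
const learningRate = 0.1;

// Scale x and y to [0, 1) so they match the bias input of 1
const toInputs = (x, y) => [x / SIZE, y / SIZE, 1];

function guess(inputs) {
  let total = 0;
  for (let i = 0; i < inputs.length; i++) total += inputs[i] * weights[i];
  return total > 0 ? 1 : -1;
}

// Perceptron learning rule: nudge the weights after every wrong guess
function train(iterations) {
  for (let n = 0; n < iterations; n++) {
    const x = Math.random() * SIZE;
    const y = Math.random() * SIZE;
    const inputs = toInputs(x, y);
    const error = desired(x, y) - guess(inputs); // 0 when the guess is right
    for (let i = 0; i < inputs.length; i++) {
      weights[i] += learningRate * error * inputs[i];
    }
  }
}

// Fraction of fresh random points classified correctly
function accuracy(count) {
  let correct = 0;
  for (let n = 0; n < count; n++) {
    const x = Math.random() * SIZE;
    const y = Math.random() * SIZE;
    if (guess(toInputs(x, y)) === desired(x, y)) correct++;
  }
  return correct / count;
}

console.log("before training:", accuracy(1000));
train(5000);
console.log("after training:", accuracy(1000));
```

Because a straight line separates the two groups of points, the perceptron can learn this split. On data that no single line can separate, a single perceptron would keep making mistakes.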